This week we have been learning about AI, about the use of AI (or any technology) in the context of learning, and exploring AI tools themselves. I was quite excited going into this module because I interact with AI daily. I have experienced first-hand the benefits this technology can bring, as well as its potential pitfalls, and I was curious to hear what the literature on AI in learning had to say. Truth be told, I expected a lot of grim takes and for most opinions to be coloured quite negatively. Instead, I was pleasantly surprised to find reasonable, agreeable views that echoed much of what I feel about AI’s positive traits, as well as its many negative ones.

Please let me know what you think about my ideas surrounding AI. Don’t be afraid to poke and prod at what I have had to say! Also, let me know how you find the format of this blog. If it’s a huge miss, leave a comment absolutely ridiculing me! (Don’t really, though. Just tell me how I could improve it and/or workshop it!) 😊

My use of AI leading into this week:

Much like other computer science students and hobbyist programmers, I too have experimented with AI.

I have committed the sacrilegious act of making a product solely with AI, enduring painstaking copy/paste sessions with ChatGPT to get it working, and I would recommend everyone try this at least once. It will show you the limitations of this technology and how detrimental it can be to your learning, because you will walk away with no real working knowledge of what makes the product function. I’d also wager that when the inevitable bug arises and you have no clue how to deal with it, you will have to copy/paste the product straight back into ChatGPT. Yet I still use it daily. I just don’t use it to code; rather, I use it to teach me how to code, or to teach me anything in general.

I feed it programming ideas and concepts, ranging from how types work in a specific programming language to the fundamental theories the field is built on, and then I engage with what it has said. I never fully trust anything it puts out, but I don’t distrust it either: I treat it like something I heard in passing from a colleague or peer, and research its validity myself. I then probe and question the AI tool further to expand my understanding of the concept, rather than just getting answers out of it.

I have found that using ChatGPT in this way has helped me learn concepts much more quickly than my peers.

Ethics: What ethical concerns do you have (or not have) about the use of some of these tools?

ChatGPT came onto the scene quite aggressively and quickly, so quickly in fact that many consumers forgot to stop and think about where and how it was generating its content. ChatGPT (and other AI tools like it) needs a large body of information to ingest and learn from. Anything that comes out of ChatGPT therefore isn’t really created from nothing; rather, it is an amalgamation of data it has processed and pieced together. Despite this, tools like ChatGPT are treated as if they generated this content themselves, with no tip of the cap to the original artists, authors, programmers, etc. whose work ChatGPT pieced together to spit something out. When you consider that OpenAI charges for its service (not so open of OpenAI), it makes you wonder where the original creators of this data are being considered at all. Where are their financial kickbacks? Where is their recognition?

Many people use online AI tools without a thought for what happens to the prompt they provide to the service. It is often ambiguous what happens with this prompt. Is it stored or used to train the model further? Is there any sanitization of the personal or sensitive data it may contain? Who has the rights to the intellectual property of the prompt itself?

What about people who look to AI tools for advice and are misguided, or even given life-threatening advice? Who is held responsible for this potentially destructive advice? What if someone in a fragile state were to harm themselves using these tools?

DALLE-3 Images Fed to Sketch

So, I was a bit curious and was wondering what Joe Camel from Camel cigarettes would look like as a cat, so I asked DALLE-3 to help this become a reality.

Fig. 1

Not really satisfied with the result, I provoked DALLE to do better…

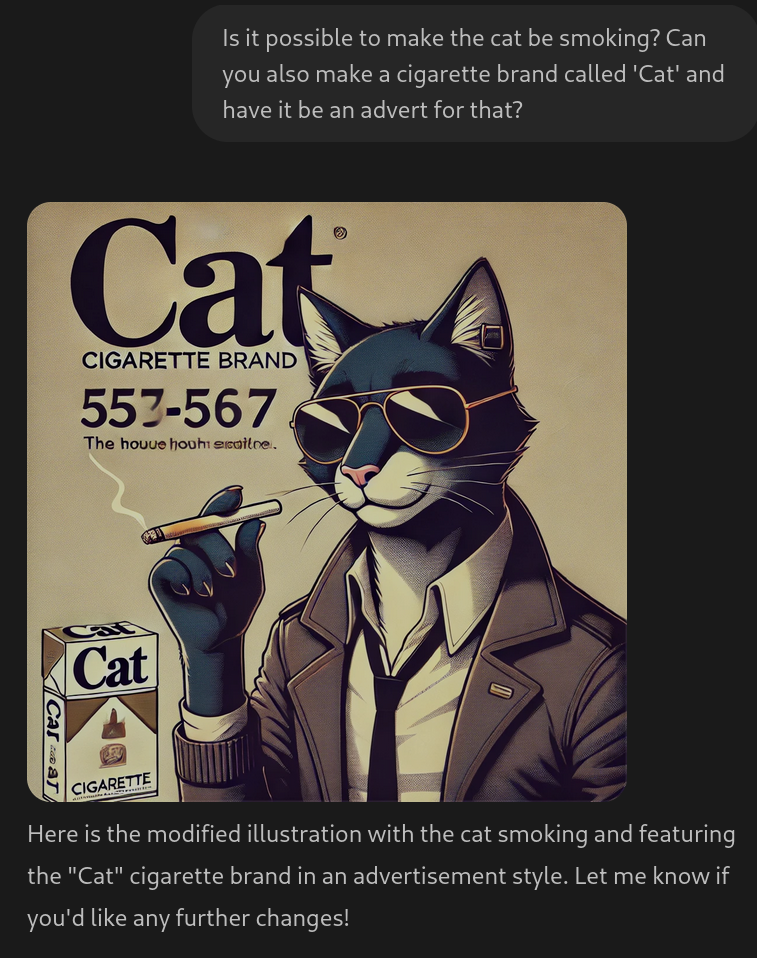

Fig. 2

Then curiosity killed the cat when I took this image from DALLE and fed it into Sketch…

Fig. 3

I guess the world really doesn’t need Joe Cat the smoking cat from Cat cigarettes… come to think of it, the world probably didn’t need Joe Camel either.

SAMR Analysis: ChatGPT on Gemini

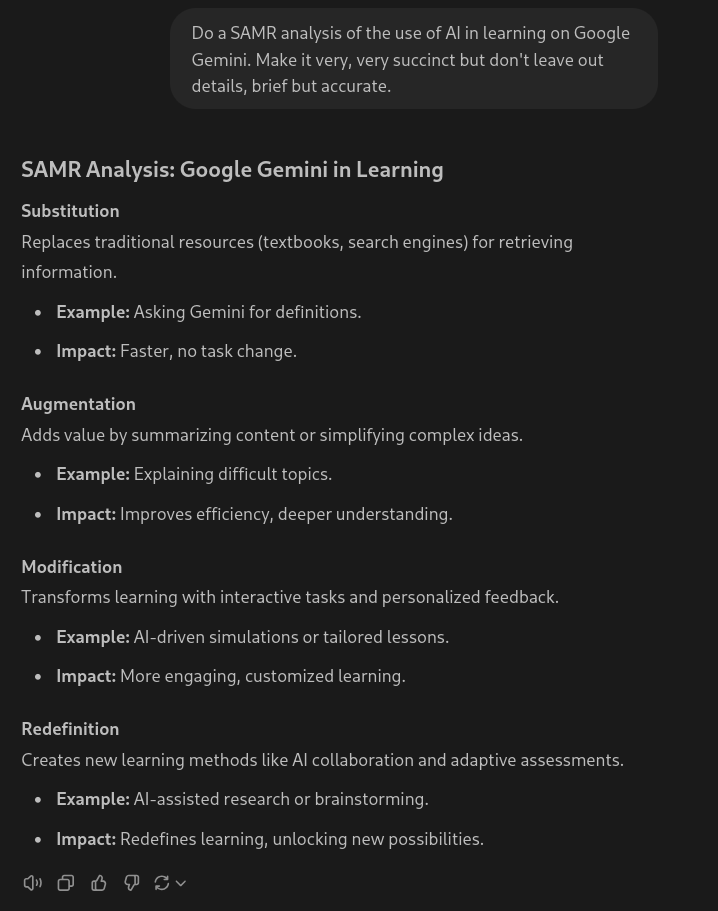

I prompted ChatGPT to do a SAMR Analysis on Gemini…

Fig. 4

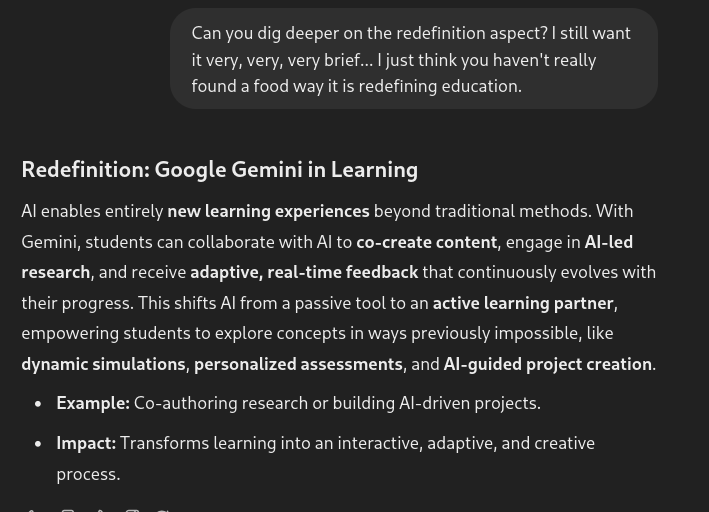

In the analysis I felt that ChatGPT struggled the most with the Redefinition aspect of the analysis… so I further prompted it to dig deep!

Fig. 5

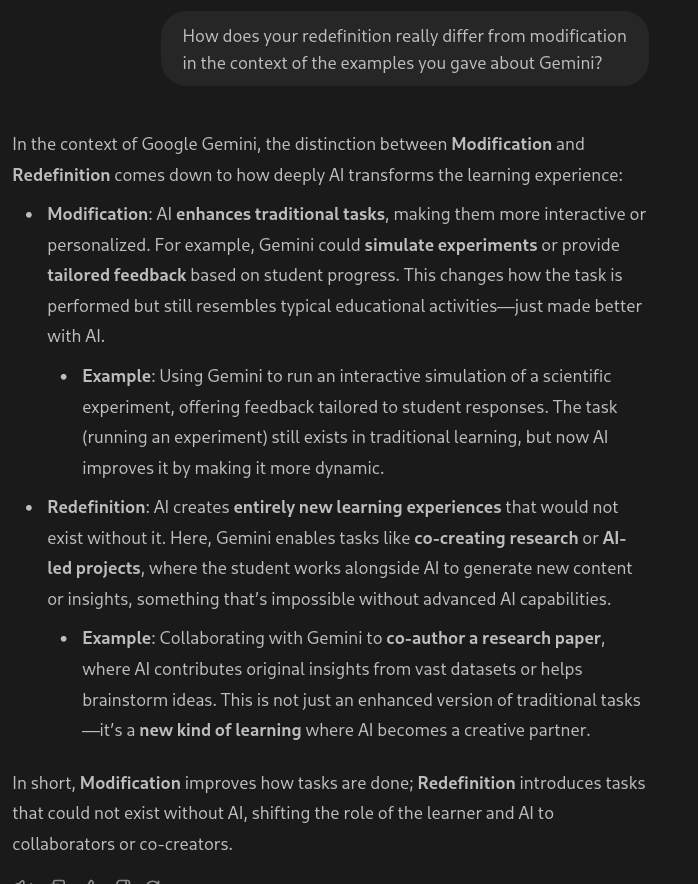

Here I felt ChatGPT still hadn’t made much progress. The redefinition and modification examples seemed too similar, so I asked ChatGPT to explain how they were different in its eyes…

Fig. 6

… and I think ChatGPT finally did a great job of explaining the differences. I think much of this was my fault, though, as the one who wrote the prompt, rather than the fault of the AI tool itself. My prompt was forcing ChatGPT to be brief, but once that restriction was removed, ChatGPT was able to turn the vague uses of Gemini with respect to SAMR into more concrete examples.

Conclusions

I think overall my opinions on AI tools were further confirmed by my experiences. What matters most for getting useful results is a useful input: garbage in, garbage out. There are limitations to this, however. Tools usually don’t have access to the news and aren’t up to date on what is happening right now, yet they still have to produce a result, so they will create one from the knowledge they do possess, effectively becoming their own source of garbage in, garbage out. AI tools are fallible, but useful. As long as students aren’t using these tools as a source of answers, I find them to be great for the educational experience.

I could go on, and on, and on, and on…. but I think I have to end somewhere. Hopefully this wasn’t too little or too much! Let me know your thoughts on my experiences!

AI Citations

Fig. 1 “Draw me a cat in the style of the camel from the Joe Camel cigarette cartoons in the 1900s” prompt ChatGPT, OpenAI, 11 Oct. 2024, https://chatgpt.com/

Fig. 2 “Is it possible to make the cat be smoking? Can you also make a cigarette brand called ‘Cat’ and have it be an advert for that?” prompt ChatGPT, OpenAI, 11 Oct. 2024, https://chatgpt.com/

Fig. 3 Given image of Fig. 2 prompt Sketch, Meta, 11 Oct. 2024, https://sketch.metademolab.com/

Fig. 4 “Do a SAMR analysis of the use of AI in learning on Google Gemini. Make it very, very succinct but don’t leave out details, brief but accurate.” prompt ChatGPT, OpenAI, 11 Oct. 2024, https://chatgpt.com/

Fig. 5 “Can you dig deeper on the redefinition aspect? I still want it very, very, very brief… I just think you haven’t really found a food way it is redefining education.” prompt ChatGPT, OpenAI, 11 Oct. 2024, https://chatgpt.com/

Fig. 6 “How does your redefinition really differ from modification in the context of the examples you gave about Gemini?” prompt ChatGPT, OpenAI, 11 Oct. 2024, https://chatgpt.com/
